Creating Synthetic Wildfires with Unreal Engine

Where there’s smoke, there’s fire

Background

From the minute I heard of the concept of synthetic data, I loved it. Probably because I’m the type of person who will spend a week automating something tedious that would’ve taken me only two days to do by hand. “I will re-use this script in the future, and then I’ll be happy I have it,” I usually tell myself. Often true, though not always.

For those new to the term, “synthetic data” is just what it sounds like: artificial data created out of thin air. Machine learning engineers often need a very specific labeled dataset that can’t be readily found online. When that happens, get ready to collect, and then hand-label, a huge amount of data. It’s very tedious and also error-prone.

However, the beauty of synthetic data is that you get perfect training-ready labels automatically. No more manual labor, and the incremental cost of creating a hundred thousand more datapoints or images is mostly just computer runtime.

The first time I needed to create my own dataset was back in 2020. At that time I was working on a product that would detect individual book spines from a huge collection of books on a shelf. I needed lots of images of books on a shelf and their bounding boxes, to train my own object detector based on the YOLO algorithm.

I was pretty new to ML then, but I took a quick stab at creating synthetic books directly in Python with the Pillow library. I drew them all helter-skelter, in lots of colors and even at various angles of orientation. I used random backgrounds based on some research papers at that time (still a good idea today to prevent overfitting to the environment).

I even had gradients, logomarks, and random spine text!

While it was a good introduction to synthetic data for me at the time, it wasn’t realistic enough to train an object detector. I did eventually get a reliable book spine detector based on YOLO working, but only after firing up the jamz and labeling real pictures of several thousand books at a Goodwill store by hand. It wasn’t fun, and at the time I remember wishing I could put realistically rendered 3D books into a virtual scene using some sort of rendering tool like Blender or something.

Detecting Wildfires

Fast forward a few years, and I’m now thinking about a different problem: detecting wildfires in forest imagery. Wildfires cause so much damage across the world every year, and fighting them takes tremendous resources and puts lives at risk. It could save billions of dollars annually if you could simply detect them much earlier and put them out before they have spread far.

I talked to a guy I know who works at the county sheriff’s office, and he mentioned that wildfires are currently just called in by people: sometimes forest watchtower spotters, but more often a casual hiker or mountain biker. The county then (in our county at least) sends out a pilot to the area to confirm the sighting. This can take hours, and by the time a fire has been confirmed, it has had a lot more time to spread and become much more costly to put out.

My idea was a startup that would design solar-powered autonomous glider drones that would circle 24/7 throughout the summer (wildfire season) in a grid-like pattern over national forest. Each drone would be outfitted with high-resolution cameras and, using computer vision, would detect wildfires and automatically send alerts and coordinates to authorities much sooner.

It was a pretty ambitious solution. Even if each drone could cover a 10 mile x 10 mile square in its grid, you would still need thousands and thousands of these drones hovering at all times, rain or shine, day and night, over the U.S. forest alone. I did a preliminary search and it looked like not only would I be pioneering some sort of wildfire detection via image but first I would have to create a drone that could fly and charge so efficiently by day that it could fly all night via battery power alone – something that at least at the time had never been done.

It felt too audacious, and I was pursuing startups by myself, so I ended up abandoning the idea for a different startup that felt more within reach. Fast forward another couple of years, and I read about a startup called Pano.ai that is doing the same thing, but with the much more realistic idea of placing stationary cameras and sensors at high points all across the western Rocky Mountains. Enter the familiar yet sinking feeling of deflation when someone else has taken an idea you thought was yours farther than you ever did. Still, congratulations to them. I felt a little better knowing that they’ve validated it was indeed a big problem to work on.

Even though they’re basically solving this problem, I still really like the problem itself. Having been studying AI and Machine Learning all this last year, I wanted a project that I could practice my skills with yet also be personally interesting to me. Hence, I decided to create synthetic images for wildfire localization as a fun project. This one is less about machine learning itself and more about sourcing the data (which is 80% of most ML projects).

Creating Synthetic Wildfires

To create synthetic images, a video game engine such as Unreal Engine or Unity 3D is a popular option, as is 3D modeling software such as Blender. However, I haven’t touched Blender in forever, and from a quick search it appeared that Unreal Engine (UE) was a popular choice and hence would have more help and support from online communities. Lastly, UE has recently added a Python API that exposes almost all of the underlying functionality, which clinched my decision to use it.

I have to admit, the learning curve of UE was very steep, and I don’t think I’ve come close to exploring the depth of what it can do. But at the same time, much of what it does is irrelevant for the purposes of making synthetic images. Since UE is first and foremost a game engine, those using it for AI will find themselves at times going against the grain, and fairly alone in their unique problems. I ran into many a dead-end forum thread from someone who had hit the same issue I had.

The basic concept for creating the synthetic data seems simple: place a fire or smoke column into a mountain scene, capture an image of it from some angle, and convert its points in the game world into labeled bounding boxes in image coordinates. I was able to find two relatively inexpensive assets in the Unreal Marketplace, one for the mountain scenery and one for a smoke column. I decided against trying to also draw fire for the sake of scope reduction, and since I figured that in real world systems, you probably would see smoke before you saw any actual fire.

Basic Object Manipulation in the Game

What I wanted to do seemed pretty simple, but I ran into problem after problem getting things working. It took a while just to get Python working within the environment, though a lot of that was probably because I also knew nothing about UE and needed to learn basic UE game engine concepts.

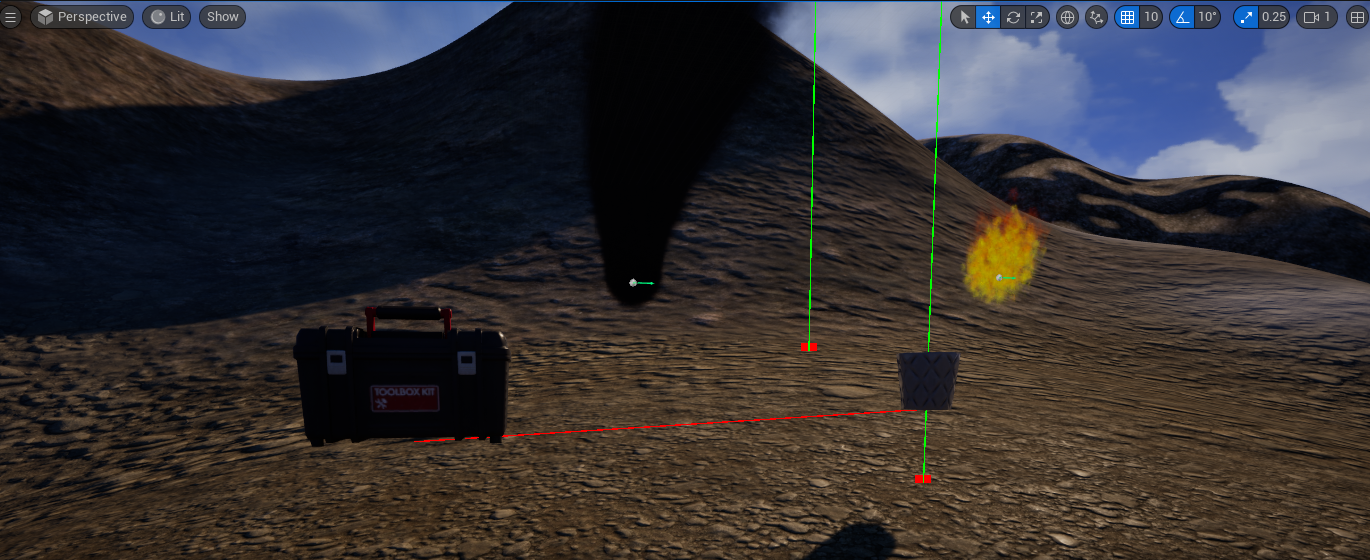

Eventually, I found myself with my purchased assets and a few random other ones for debugging correctly inserted into the world and ready for my bidding.

There’s fire, smoke, a toolbox, and a planting pot up there on yonder mountain

Once I got some objects into the world, I wrote some basic Python functions for tasks I figured would be common manipulations. For example, a common requirement is to detect if one object is visible from another object. In Unreal Engine, the camera is one such object (or “actor”) in the level and so I needed to know if the camera could see the smoke from where it was currently located. To do this, you fire a ray trace from the center of one object to the center of another and see if a hit is detected. If one object can hit the other with a ray trace, then I know it is visible via line-of-sight and isn’t occluded by a hill or some other object.
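To make the line-of-sight idea concrete, here is a minimal engine-independent sketch, not the actual script. Inside Unreal you would use the engine’s own line trace; this version just samples points along the segment and checks them against a terrain height function. The names `has_line_of_sight`, `height_at`, and `hill` are hypothetical, and the hill itself is made up for the demo.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def has_line_of_sight(
    start: Vec3,
    end: Vec3,
    height_at: Callable[[float, float], float],
    samples: int = 200,
) -> bool:
    """Return True if the straight segment start->end stays above the terrain.

    Only interior sample points are tested, so an endpoint sitting on the
    ground is not counted as blocking itself.
    """
    for i in range(1, samples):
        t = i / samples
        x = start[0] + t * (end[0] - start[0])
        y = start[1] + t * (end[1] - start[1])
        z = start[2] + t * (end[2] - start[2])
        if z < height_at(x, y):
            return False  # the ray dipped below ground: something is in the way
    return True


# Hypothetical terrain: one smooth hill, 100 units tall, centred on the origin.
def hill(x: float, y: float) -> float:
    return 100.0 * math.exp(-(x * x + y * y) / (2 * 50.0 ** 2))


camera = (-200.0, 0.0, 10.0)
smoke = (200.0, 0.0, 10.0)
blocked_view = has_line_of_sight(camera, smoke, hill)  # False: the hill is in between
raised_view = has_line_of_sight(
    (-200.0, 0.0, 150.0), (200.0, 0.0, 150.0), hill
)  # True: both points are above the hilltop
```

A sampled check like this can miss very thin obstacles between samples, which is one reason a real engine trace is the better tool when it is available.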

In the above image, you can also see some ray traces coming down from the sky. Since the terrain is fairly random and not uniformly flat like in some simpler applications of synthetic data, I also needed to know, at any X, Y point in the game world, what was the Z coordinate of the terrain at that point. To solve this, I cast a ray down from the heavens and compute the Z coordinate at the intersection point.

I had too much fun shooting down beams from space. For some reason this just reminds me of the ark scene from Raiders of the Lost Ark.

This is roughly the same idea of casting a ray from one object to another, except the world object is very large (8km x 8km in my case) and the centerpoint of the terrain object isn’t really relevant, so I simply cast down from a very high altitude and measure where the ray hits the ground.
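As a rough illustration of the “beam from space” trick outside the engine, the sketch below intersects a vertical ray with a small triangle mesh and returns the highest hit. It assumes the terrain is available as a list of triangles, which is a simplification; in Unreal the landscape collision handles this for you. `ground_z` and the two-triangle slope are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def ground_z(x: float, y: float, triangles: Sequence[Triangle]) -> Optional[float]:
    """Z where a vertical ray through (x, y), cast from above, first meets the mesh.

    Returns None if the ray misses every triangle.
    """
    best = None
    for a, b, c in triangles:
        # Barycentric coordinates of (x, y) within the triangle's XY footprint.
        det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
        if abs(det) < 1e-12:
            continue  # triangle is edge-on to a vertical ray; skip it
        w_a = ((b[1] - c[1]) * (x - c[0]) + (c[0] - b[0]) * (y - c[1])) / det
        w_b = ((c[1] - a[1]) * (x - c[0]) + (a[0] - c[0]) * (y - c[1])) / det
        w_c = 1.0 - w_a - w_b
        if min(w_a, w_b, w_c) < 0.0:
            continue  # (x, y) falls outside this triangle
        z = w_a * a[2] + w_b * b[2] + w_c * c[2]
        if best is None or z > best:
            best = z  # a ray coming from above hits the highest surface first
    return best


# Hypothetical 10 x 10 patch of ground that slopes upward along X (z == x).
slope = [
    ((0.0, 0.0, 0.0), (10.0, 0.0, 10.0), (10.0, 10.0, 10.0)),
    ((0.0, 0.0, 0.0), (10.0, 10.0, 10.0), (0.0, 10.0, 0.0)),
]
z_on_slope = ground_z(5.0, 2.0, slope)
z_off_map = ground_z(20.0, 20.0, slope)  # None: nothing under this point
```

Because the ray is vertical, the usual 3D ray-triangle test collapses into a 2D point-in-triangle check plus an interpolation of Z, which keeps the sketch short.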

Procedure for a Single Image

With these basic functions that could get a random point on earth, get a random 2nd point within the vicinity of the 1st random point, detect if actors were visible from another actor, and move game actors to locations, I was ready to put together the basic algorithm to create a single image.

First I pick a random spot in the level, and move the camera to that location. For the camera object, I also add a random vertical offset to represent that the camera need not sit on the ground, but could also be up in the air, whether mounted to a pole or the top of a tree or tower.

Next, I pick a random spot in the mountains that is within some variable distance from the camera, and at that new X, Y location, I place the smoke column that represents the wildfire. The size and shape of the smoke column are always the same, though future versions of this could potentially control that. The smoke is not a static asset, however, but is dynamically generated through Unreal’s particle effects rendering engine. Unlike the camera actor, the smoke actor is always placed right on the ground with no offset.

Next, I determine if the smoke is visible from the camera’s location using another line-of-sight function that works via the same ray-casting principle. At this point we know that the smoke is within the correct radius from the camera, but it’s not always visible because sometimes the camera or the smoke could be stuck in a valley, or over a hill from one another. If the smoke is not visible from the camera’s location, then I generate a new random location for the smoke. I try this a variable number of times. If it fails to produce a valid point after so many tries, then it’s possible the camera is just stuck in a bad spot (e.g. that valley example), so I move the camera to a new random spot and restart the search for a good smoke location. This can sometimes take multiple tries to find good locations (like, dozens of tries), but I’ve yet to see it take more than a second to find a solution.
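The placement loop described above can be sketched roughly like this. It is not the actual script: `place_camera_and_smoke` is a hypothetical name, the terrain lookup and visibility check are passed in as plain functions, and every number (world size, distances, camera height, retry counts) is a placeholder.

```python
import math
import random
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def place_camera_and_smoke(
    ground_z: Callable[[float, float], float],
    visible: Callable[[Vec3, Vec3], bool],
    rng: random.Random,
    world_size: float = 8000.0,       # placeholder extent of the level
    min_distance: float = 200.0,      # placeholder camera-to-smoke range
    max_distance: float = 2000.0,
    max_camera_height: float = 30.0,  # placeholder pole or tower height
    smoke_tries: int = 20,
    camera_tries: int = 50,
) -> Optional[Tuple[Vec3, Vec3]]:
    """Return (camera, smoke) positions with line of sight, or None if none found."""
    for _ in range(camera_tries):
        cx = rng.uniform(0.0, world_size)
        cy = rng.uniform(0.0, world_size)
        # The camera may sit above the ground (pole, tree, tower).
        camera = (cx, cy, ground_z(cx, cy) + rng.uniform(0.0, max_camera_height))
        for _ in range(smoke_tries):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            dist = rng.uniform(min_distance, max_distance)
            sx = cx + dist * math.cos(angle)
            sy = cy + dist * math.sin(angle)
            if not (0.0 <= sx <= world_size and 0.0 <= sy <= world_size):
                continue  # candidate fell off the map; try another one
            smoke = (sx, sy, ground_z(sx, sy))  # smoke always sits on the ground
            if visible(camera, smoke):
                return camera, smoke
        # No visible smoke spot from here: the camera is probably in a bad
        # location (a valley, say), so pick a new camera position.
    return None


# Toy usage: flat ground where everything is visible from everywhere.
placement = place_camera_and_smoke(
    lambda x, y: 0.0, lambda a, b: True, random.Random(0)
)
```

Passing the random generator in makes a run reproducible, which helps when a particular placement produces a strange image and you want to recreate it.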

Once the camera and the smoke are placed in satisfactory locations, I then compute a rotation matrix for the camera that, when applied to the camera’s current rotation, points the camera straight at the smoke so that it’s in the center of the screenshot. I then add a random roll, pitch, and yaw offset to the camera’s orientation so the wildfire isn’t exactly at the center of the screenshot (as with any good dataset, we don’t want the model to learn that wildfires are always in the middle of images). I experimentally found how much yaw I could add while keeping the wildfire within the camera’s field of view, or frustum. For the pitch, there is less variation, about +/-10 degrees, as I think a real-world system like Pano’s would likely set their cameras fairly level. Lastly, I kept the roll to +/-2 degrees, as more just looked silly, since you’d also mount your cameras level roll-wise.
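Here is a small sketch of the aim-then-jitter step. Note that it works directly with yaw and pitch angles rather than the rotation matrix mentioned above, which is a simpler stand-in for the same idea. It assumes X is forward and Z is up; the function names are hypothetical, and the 30-degree yaw limit is a placeholder (the pitch and roll limits are the ones quoted above).

```python
import math
import random
from typing import Tuple

Vec3 = Tuple[float, float, float]
Rotation = Tuple[float, float, float]  # (roll, pitch, yaw) in degrees


def look_at(camera: Vec3, target: Vec3) -> Rotation:
    """Rotation that points the camera's forward (+X) axis at the target."""
    dx, dy, dz = (t - c for t, c in zip(target, camera))
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return 0.0, pitch, yaw


def jittered_aim(
    camera: Vec3,
    target: Vec3,
    rng: random.Random,
    max_yaw: float = 30.0,    # placeholder; tune so the target stays in frame
    max_pitch: float = 10.0,
    max_roll: float = 2.0,
) -> Rotation:
    """Aim at the target, then nudge the view so the target is off-centre."""
    roll, pitch, yaw = look_at(camera, target)
    return (
        roll + rng.uniform(-max_roll, max_roll),
        pitch + rng.uniform(-max_pitch, max_pitch),
        yaw + rng.uniform(-max_yaw, max_yaw),
    )


centred = look_at((0.0, 0.0, 0.0), (10.0, 10.0, 0.0))
nudged = jittered_aim((0.0, 0.0, 0.0), (10.0, 10.0, 0.0), random.Random(42))
```

A sensible upper bound for the yaw jitter is a bit under half the camera’s horizontal field of view, since anything beyond that pushes the target out of the frame.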

Lastly, I take a screenshot of the scene and derive the bounding box labels, which each deserve their own discussion.

Determining Bounding Boxes

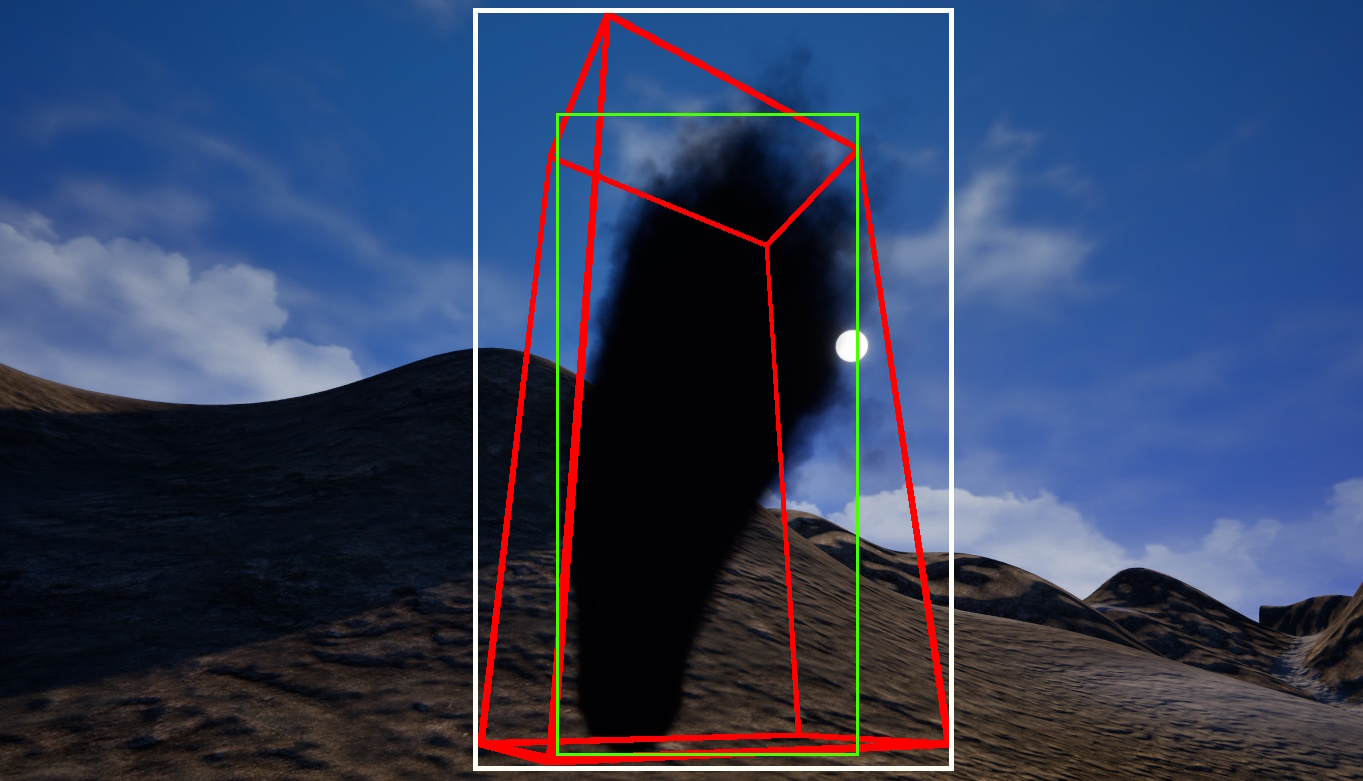

My original thought on deriving the image bounding boxes for the wildfires was that I already knew the perfect bounds of the object in 3D space, since I know the smoke centerpoint and the extent of the 3D bounding box around it. So my original plan was to find the 8 corner points of that 3D bounding box, project those to 2D screen space, and then find the minimum and maximum X and Y of those projected points to get the true labels for the images.

However, that creates an accuracy problem best illustrated by the picture below.

3D bounding box (red), 2D projection of 3D box (white), actual 2D bounding box (green)

In this picture, the red bounding box captures the full smoke pillar perfectly along its 3D bounds. However, a lot of that volume isn’t smoke. Usually in computer vision, the localization bounding box for an object is rectilinear and is the smallest box that fully encloses (or covers) the object. However, we can see here that if we try to enclose the projected 3D bounding box, we get the 2D rectilinear box in white, which clearly contains much more than just smoke. A convolutional neural network trained on this won’t generalize well because its training labels wouldn’t be accurate. The green box is the 2D rectilinear box that tightly encloses the bulk of the smoke visible on the screen.
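For reference, the corner-projection approach (the one that yields the loose white box) looks roughly like this. It is a bare pinhole-camera sketch under strong assumptions: the camera sits at the origin looking down +X with Y to the right and Z up, and the whole box is in front of it. `project_box_to_screen` and its defaults are hypothetical.

```python
import math
from itertools import product
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_box_to_screen(
    center: Vec3,
    extent: Vec3,
    fov_deg: float = 90.0,
    width: int = 1920,
    height: int = 1080,
) -> Tuple[float, float, float, float]:
    """Pixel box (left, top, right, bottom) around the 8 projected box corners."""
    focal = (width / 2) / math.tan(math.radians(fov_deg) / 2)
    xs, ys = [], []
    for sx, sy, sz in product((-1, 1), repeat=3):  # the 8 corner sign patterns
        x = center[0] + sx * extent[0]
        y = center[1] + sy * extent[1]
        z = center[2] + sz * extent[2]
        if x <= 0:
            raise ValueError("box corner is behind the camera")
        xs.append(width / 2 + focal * y / x)   # screen X grows to the right
        ys.append(height / 2 - focal * z / x)  # screen Y grows downward
    return min(xs), min(ys), max(xs), max(ys)


loose_box = project_box_to_screen((1000.0, 0.0, 0.0), (100.0, 100.0, 100.0))
```

The result always encloses the entire 3D volume, empty corners included, which is exactly why it overshoots a tapering smoke column.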

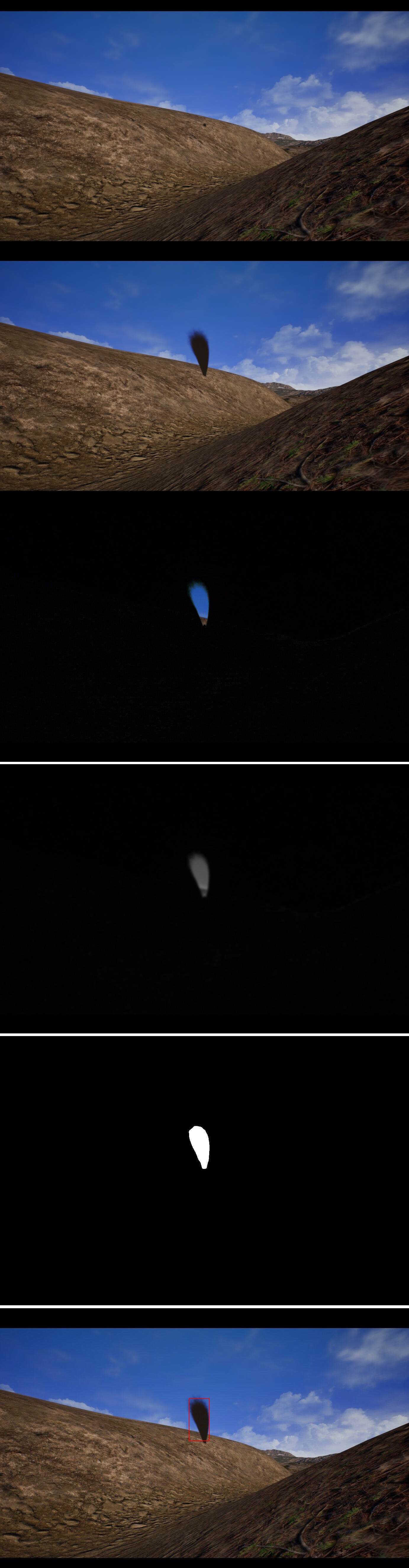

So, rather than creating the 3D red bounding box and trying to convert that to the green one (we can see there isn’t any algorithmic way to convert the two since it would depend on camera angles), we instead want to directly find the outline on a 2D screen. To do this, I essentially take one screenshot at the first animation frame of the smoke, where it is just a baby smoke. I then take another screenshot several seconds later when the emitter has been animated to its steady-state full-smoke state. By taking essentially a diff of these images, with the only change between frames being the growth of the smoke, we by definition get a pixel region that only contains the smoke pillar object. Many thanks to my friend Dan for the conversation that inspired this idea.

I then pass these images through a very standard image processing pipeline using Python and the Pillow library. OpenCV would have been another option, but Pillow already comes preinstalled in the Unreal Engine Python environment, and it did what I needed. In the image strip, the first two images are the two snapshots, followed by the diff between them; the diff is then grayscaled and put through a Gaussian filter to remove noise, a threshold sets every pixel to 0 or 1, and finally the X, Y bounds of the remaining nonzero region become the rectilinear bounding box for the wildfire. A text file is also output with the coordinate values as a label, whereas the bounding box is drawn just for human demonstration.
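To show the shape of that pipeline without any dependencies, here is a toy version on plain Python lists. It assumes the two frames are already grayscale, swaps the Gaussian filter for a small 3x3 Gaussian-like kernel, and uses an arbitrary threshold of 64. With Pillow, the matching building blocks would be `ImageChops.difference`, `ImageFilter.GaussianBlur`, `Image.point`, and `Image.getbbox`. All function names below are hypothetical.

```python
from typing import List, Optional, Tuple

Gray = List[List[int]]  # rows of 0-255 grayscale values


def abs_diff(a: Gray, b: Gray) -> Gray:
    """Per-pixel absolute difference of two same-sized frames."""
    return [[abs(pa - pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def blur3x3(img: Gray) -> Gray:
    """Smooth with a 1-2-1 Gaussian-like kernel, clamping at the image edges."""
    kernel = ((1, 2, 1), (2, 4, 2), (1, 2, 1))
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += kernel[dy + 1][dx + 1] * img[yy][xx]
            row.append(total // 16)
        out.append(row)
    return out


def bounds(img: Gray, threshold: int) -> Optional[Tuple[int, int, int, int]]:
    """(left, top, right, bottom) of all pixels above threshold, or None."""
    hits = [(x, y) for y, row in enumerate(img) for x, v in enumerate(row) if v > threshold]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return min(xs), min(ys), max(xs), max(ys)


def smoke_bbox(before: Gray, after: Gray, threshold: int = 64):
    """Diff -> blur -> threshold -> bounding box of whatever changed."""
    return bounds(blur3x3(abs_diff(before, after)), threshold)


# Toy frames: a 5 x 5 bright "smoke" patch appears, plus one stray noisy pixel.
baby = [[50] * 10 for _ in range(10)]
adult = [row[:] for row in baby]
for y in range(2, 7):
    for x in range(3, 8):
        adult[y][x] = 250
adult[8][1] = 150  # isolated noise that the blur and threshold should reject
box = smoke_bbox(baby, adult)
```

The blur spreads a lone noisy pixel thin enough that it falls under the threshold, while a solid region keeps most of its strength, so only the patch survives into the bounding box.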

Baby smoke, Adult smoke, Diff, Grayscale + Gaussian, Thresholded, 2D Bounds Labeled

To make this work, nothing else can change between frames, so it is critical to turn off dynamic lighting and shadows, set cloud speeds to 0, set atmospheric fog effects to non-animated, etc. Even then, there was some initial land shading that differed between the two frames that I couldn’t completely eliminate (you can see this shader noise in the diff image), but it’s cleaned up later by the Gaussian filter and thresholding.

Asynchronous Snapshots and Further Work

One issue I had was that the high-res screenshot library function provided by Unreal Engine works asynchronously. That is, it doesn’t block the rest of my Python script: all of the outstanding Python code runs within the current game engine frame, or ‘tick’, before any queued screenshot is actually taken. I’m sure this is great for people using this for game effects, where you wouldn’t want the game to freeze while some function is running, but for me this is definitely undesired. My script works well to get one image, but if you were to run it in a loop, each snapshot would be queued instantly, the Python code would then move the camera and smoke to a new location and queue another snapshot, and so on up to the number of desired training images. But the snapshots would all be taken at that final location, not the location the objects were in when each snapshot was enqueued. You’d loop to get, say, 10,000 images, and end up with just one.

The solution to this is to tie my Python code into some sort of asynchronous callback that runs on each game engine tick. However, I felt this was out of scope for this project, and it’s something I’ll end up resolving the next time I make a dataset. This personal project was already quite challenging as it was. Getting up and running with a brand new and quite complex tool like Unreal Engine, buying and instantiating the assets, learning to modify the particle-effect smoke asset, learning to place actors in scenes, move them, and ray-cast between them, the issues I had with 3D bounding boxes, and finally even enqueuing the asynchronous snapshots all added headaches. Ultimately I decided this was enough scope for my first stab at it.
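For what it’s worth, one possible shape for that tick-driven approach is a Python generator that does one small step per tick and then yields back to the engine. The sketch below is untested inside Unreal and uses a plain loop as a stand-in for the engine; in the editor, I believe a callback like this could be registered with `unreal.register_slate_post_tick_callback`, but treat that as an assumption to verify. `capture_job`, `make_tick_callback`, and the number of settle ticks are all hypothetical.

```python
from typing import Callable, Iterator, List


def capture_job(n_images: int, settle_ticks: int, log: List[str]) -> Iterator[None]:
    """Do one small step of work per engine tick; each `yield` ends the tick."""
    for i in range(n_images):
        log.append(f"move {i}")  # stand-in for moving the camera and smoke
        yield                    # let the engine render the new layout
        log.append(f"shot {i}")  # stand-in for queueing the screenshot
        for _ in range(settle_ticks):
            yield                # give the async screenshot time to finish


def make_tick_callback(job: Iterator[None]) -> Callable[[float], bool]:
    """Wrap the generator so each call advances it by exactly one tick."""
    def on_tick(delta_seconds: float) -> bool:
        try:
            next(job)
            return True   # more work remains
        except StopIteration:
            return False  # job finished; the caller can unregister the callback
    return on_tick


# Stand-in for the engine's frame loop.
log: List[str] = []
on_tick = make_tick_callback(capture_job(3, settle_ticks=2, log=log))
ticks = 0
while on_tick(1 / 60):
    ticks += 1
```

The point of the generator is that the loop over images keeps its natural top-to-bottom shape, while the moves and screenshots land on different ticks instead of all piling up in one.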

Conclusion

I still think synthetic image datasets are the bee’s knees. As someone who loves automation and hates manual labor, I find the idea of “writing this once” and then going on a coffee walk while my disk fills up with random, perfectly labeled images quite appealing. However, I found actually doing this much more difficult than I imagined it’d be. If you attempt this, be prepared to raise many old unanswered forum threads from the dead and to read a lot of the Unreal Python API documentation. It is a road not well-traveled, but still worth the work in my opinion for the results you can get. And with game engine graphics getting more and more realistic all the time, this option will only get more viable in the future.

I wish Pano.ai well, but who knows, maybe my next version of this script will be creating a bird’s-eye view of wildfires for my autonomous glider drone solution instead.